Survey: 65% Use AI Legal Advice, But Accuracy Concerns Remain

New data reveals how Americans use AI for legal questions, why they still rely on lawyers, and what law firms can do now to build safer AI-ready workflows.

Nearly two-thirds of Americans (65%) have already turned to an AI chatbot for legal help. However, this surge in usage has a clear limit. Our new survey reveals that consumers view AI as a convenient research tool, not a replacement for human counsel.

While users trust AI to explain legal terms, that confidence falters when the stakes rise. For high-consequence issues like criminal or family law, human expertise remains the overwhelming preference.

This signals a division of labor rather than a competition. While convenience drives adoption of AI tools, fears regarding accuracy and security create a ceiling for this emerging technology.

Our study maps those boundaries, exploring the resistance to high-stakes legal automation and how firms can use AI for efficiency while keeping human judgment at the center.

Key Takeaways

- 39% of Americans say AI is good for early research, but that a lawyer should handle legal decisions when issues arise.

- 41% of adults say they would only rely on a lawyer — not AI — to learn about serious matters like criminal accusations, divorce, or immigration.

- 46% of people who have not used AI for legal advice cite concerns about accuracy or misinformation as a reason they have stayed away.

- Among those who have used AI for legal help, 18% say convenience and accessibility are their primary reason, compared with just 8% who say it’s cheaper than consulting a lawyer.

Americans Are Using AI in a Support Role for Legal Matters

While 65% of Americans report having used an AI chatbot for legal purposes, this high adoption rate masks a specific boundary. Consumers are not outsourcing their legal problems to machines. Instead, they’re utilizing legal AI tools primarily for low-stakes information gathering.

The data reveals that comfort levels peak when AI functions as a reference tool. 43% of respondents feel comfortable using AI to clarify legal terms, while 38% use it to research general rights or gather information before consulting a professional.

We see a similar pattern emerging in the industry. Rev’s 2025 Legal Tech Survey found that 48% of legal professionals have incorporated AI into their daily research workflows. While consumers and lawyers operate with different motivations, both groups are currently utilizing AI primarily for preparation rather than decision-making.

For consumers, trust drops the moment the task shifts from research to execution. Only 29% of people feel comfortable trusting AI to draft even a simple contract or agreement. This distinction highlights that while Americans view AI as a capable assistant for research, they remain hesitant to trust it with the final output.

Consumers Prioritize Speed and Access Over Cost Savings With Legal AI Use

A common assumption is that clients turn to AI solely to avoid legal fees, but the data tells a different story. Only 8% of users cite "lower cost than consulting a lawyer" as their primary reason for using these tools.

Instead, the dominant drivers are speed and access. When asked why they use AI, 18% point to convenience and accessibility. Another 15% cite the need for quick guidance or faster answers than waiting for a professional.

This drive for autonomy is strong. Nearly 23% of respondents indicate they would use AI to prepare for self-representation in court, handling tasks like gathering facts or drafting statements.

However, this accessibility carries a hidden price tag. We’ve already seen the risk of hallucinations materialize in federal court, where lawyers in Mata v. Avianca were sanctioned $5,000 for submitting fake, AI-generated case citations.

For consumers acting as their own counsel, these risks are even bigger. While the upfront cost of using a chatbot is zero, the 'backend' cost of acting on a hallucination can be catastrophic.

Without the safety net of legal training to spot a hallucination, a consumer submitting a fake citation doesn't just risk a fine. They risk losing their case, their housing, or their custody rights entirely.

Consumers Draw the Line at High-Stakes Legal Issues

While consumers value the speed of AI, they don’t prioritize it over accuracy when their legal rights are at risk. Human judgment remains the non-negotiable standard for high-stakes matters. This caution is understandable given that nearly 40% of respondents admit they are unsure if AI tools even get their information from official legal sources.

Our survey found that 41% of Americans reject AI entirely for high-stakes legal matters, stating they would only rely on a lawyer to learn about issues such as criminal accusations, divorce, or immigration. Even among those open to using AI, comfort levels plummet for these serious topics. Only 7% would trust AI to learn about criminal charges, and just 6% would feel comfortable using it to learn about family law matters.

Where comfort does exist, it’s confined to administrative or financial disputes. Americans showed comparatively higher openness to using AI to learn about employment disputes (15%) and landlord-tenant disagreements (13%).

This reinforces a clear functional divide: People are viewing AI as a tool to learn about legal concepts, not to defend their liberty in a courtroom.

Trust Issues Persist Around Accuracy and Privacy

High usage has not translated into blind faith. Only 20% of Americans say they trust AI "a great deal" for legal advice. By contrast, 34% trust it only for very simple or general information, reinforcing the idea that consumers see AI as a starting point rather than an authority.

For those who reject the technology entirely, accuracy is the primary hurdle. Among people who have not used AI for legal help, 46% cite concerns about accuracy or misinformation as a reason for staying away.

This fear is practical, not theoretical. For example, a consumer who receives incorrect advice on a lease termination could face eviction, not just a simple correction.

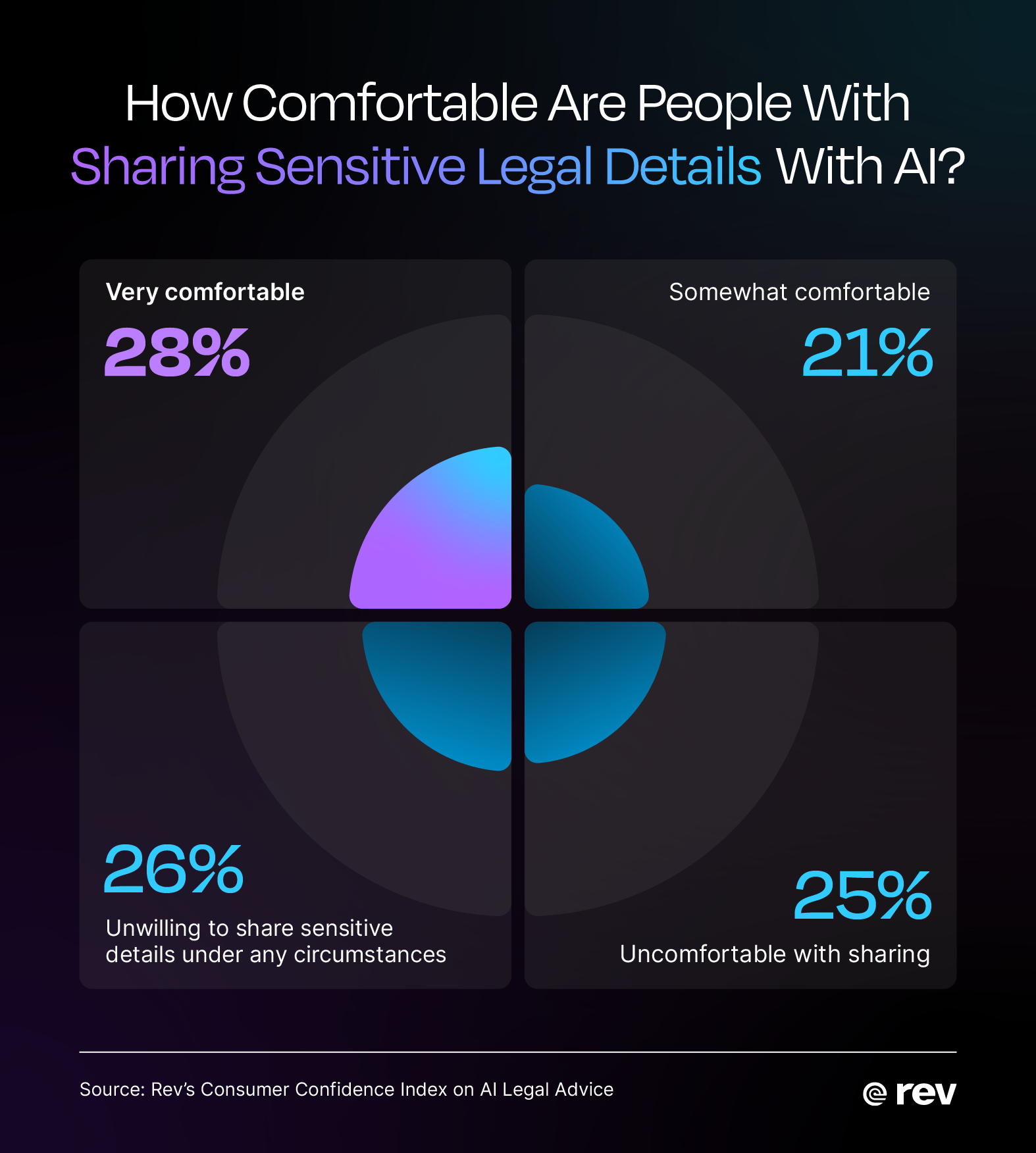

Privacy concerns are equally entrenched. When asked to identify the single biggest risk of using AI for legal advice, 20% pointed to privacy and data security. This fear creates a hard ceiling on adoption, with 25% of users stating they would not share sensitive details with a chatbot under any circumstances.

This skepticism offers a practical opportunity for legal professionals. Firms can address these fears directly by validating the accuracy of their tools and adopting secure, private models. Lawyers can turn consumer anxiety into professional trust by explicitly telling clients that their data never trains public AIs and that every output is verified by a human expert.

How to Build a Client-Centered AI Foundation

This survey data sends a clear message to the legal industry. Clients prioritize speed and accessibility, yet they firmly reject replacing human counsel with algorithms for high-stakes matters. The most effective strategy for firms, then, is to use technology to speed up their internal workflows rather than to automate their advice.

Success requires distinguishing between two types of AI. Open, generalist chatbots are trained on broad public data. This makes them versatile but risky for confidentiality. In contrast, closed-loop models are purpose-built for the legal industry to keep data isolated.

While public tools used by law firms can jeopardize attorney-client privilege through data leaks, a closed-loop system like Rev operates as a secure engine of record.

By capturing a verbatim record through transcription and tying cited evidence to its source, rather than generating novel legal advice, Rev addresses the accuracy concerns that keep 46% of non-users away from AI. This approach also addresses a primary hesitation among legal professionals, who are equally wary of integrating tools that might hallucinate or fabricate details.

For law firms, this data reveals a clear opportunity. Clients are using AI because they want answers faster. But lawyers often face outdated processes and administrative burdens that make immediate responses difficult.

By using Rev to automate transcription and evidence organization, firms can remove these bottlenecks to deliver the speed clients expect. This allows legal teams to improve efficiency without outsourcing their judgment to a machine.

Implementing AI for law firms isn't about replacement; it's about using the right tools to validate the truth.

Methodology

The survey of 1,002 adults ages 18 and over was conducted via SurveyMonkey for Rev on December 2-3, 2025. Data is unweighted, and the margin of error is approximately +/-3% for the overall sample at a 95% confidence level.
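
The stated margin of error can be sanity-checked with the standard formula for a sample proportion. The sketch below is illustrative and is not the survey team's own calculation: it assumes simple random sampling, the worst-case proportion of 0.5, and a z-value of 1.96 for a 95% confidence level. The function name `margin_of_error` is hypothetical.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Approximate half-width of a confidence interval for a proportion.

    Assumes simple random sampling (an assumption, not stated in the survey).
    p = 0.5 is the worst case; z = 1.96 corresponds to 95% confidence.
    """
    return z * math.sqrt(p * (1 - p) / n)


# Overall sample size reported in the methodology.
moe = margin_of_error(1002)
print(f"{moe * 100:.1f}%")  # prints 3.1%
```

Under these assumptions the result is consistent with the stated figure of roughly +/-3%. Percentages reported for subgroups only, such as people who have not used AI for legal help, rest on fewer respondents, so their margin of error would be wider than the overall figure.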